
Multiple-kernel ridge¶

This example demonstrates how to solve multiple-kernel ridge regression. It uses random search and cross-validation to select the hyperparameters.

import numpy as np

import matplotlib.pyplot as plt

from himalaya.backend import set_backend

from himalaya.kernel_ridge import solve_multiple_kernel_ridge_random_search

from himalaya.kernel_ridge import predict_and_score_weighted_kernel_ridge

from himalaya.utils import generate_multikernel_dataset

from himalaya.scoring import r2_score_split

from himalaya.viz import plot_alphas_diagnostic

In this example, we use the cupy backend and fit the model on GPU. With on_error="warn", himalaya falls back to the numpy backend if cupy is not installed.

backend = set_backend("cupy", on_error="warn")

/home/runner/work/himalaya/himalaya/himalaya/backend/_utils.py:55: UserWarning: Setting backend to cupy failed: Cupy not installed..Falling back to numpy backend.

warnings.warn(f"Setting backend to {backend} failed: {str(error)}."

Generate a random dataset¶

X_train : array of shape (n_samples_train, n_features)

X_test : array of shape (n_samples_test, n_features)

Y_train : array of shape (n_samples_train, n_targets)

Y_test : array of shape (n_samples_test, n_targets)

n_kernels = 3

n_targets = 50

kernel_weights = np.tile(np.array([0.5, 0.3, 0.2])[None], (n_targets, 1))

(X_train, X_test, Y_train, Y_test,

kernel_weights, n_features_list) = generate_multikernel_dataset(

n_kernels=n_kernels, n_targets=n_targets, n_samples_train=600,

n_samples_test=300, kernel_weights=kernel_weights, random_state=42)

feature_names = [f"Feature space {ii}" for ii in range(len(n_features_list))]

# Find the start and end of each feature space X in Xs

start_and_end = np.concatenate([[0], np.cumsum(n_features_list)])

slices = [

slice(start, end)

for start, end in zip(start_and_end[:-1], start_and_end[1:])

]

Xs_train = [X_train[:, slic] for slic in slices]

Xs_test = [X_test[:, slic] for slic in slices]
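As a small illustration of the slicing step above, the following sketch uses a made-up n_features_list of [2, 3, 1] (chosen for this illustration, not the values returned by generate_multikernel_dataset) and checks that the column blocks recovered by the slices concatenate back to the original matrix.

```python
import numpy as np

# Hypothetical feature-space sizes, chosen only for illustration.
n_features_list = [2, 3, 1]
X = np.arange(4 * sum(n_features_list)).reshape(4, -1)

# Column boundaries of each feature space: [0, 2, 5, 6].
start_and_end = np.concatenate([[0], np.cumsum(n_features_list)])
slices = [
    slice(start, end)
    for start, end in zip(start_and_end[:-1], start_and_end[1:])
]
Xs = [X[:, slic] for slic in slices]

# One block per feature space, with the expected number of columns.
print([X_i.shape for X_i in Xs])
```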

Precompute the linear kernels¶

We also cast them to float32.

Ks_train = backend.stack([X_train @ X_train.T for X_train in Xs_train])

Ks_train = backend.asarray(Ks_train, dtype=backend.float32)

Y_train = backend.asarray(Y_train, dtype=backend.float32)

Ks_test = backend.stack(

[X_test @ X_train.T for X_train, X_test in zip(Xs_train, Xs_test)])

Ks_test = backend.asarray(Ks_test, dtype=backend.float32)

Y_test = backend.asarray(Y_test, dtype=backend.float32)
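To give some intuition for why one linear kernel is computed per feature space, the sketch below checks a standard identity: a weighted sum of linear kernels equals the linear kernel of the concatenated features, with each feature space scaled by the square root of its weight. The data and the weights (gammas) are made up for this illustration.

```python
import numpy as np

rng = np.random.RandomState(0)
# Two hypothetical feature spaces with 4 and 3 features.
Xs = [rng.randn(10, 4), rng.randn(10, 3)]
gammas = np.array([0.7, 0.3])  # illustrative kernel weights

# Weighted sum of the per-feature-space linear kernels.
Ks = np.stack([X @ X.T for X in Xs])
K_sum = np.tensordot(gammas, Ks, axes=1)

# Linear kernel of the concatenated features, each scaled by sqrt(gamma).
X_scaled = np.concatenate(
    [np.sqrt(g) * X for g, X in zip(gammas, Xs)], axis=1)
K_concat = X_scaled @ X_scaled.T

print(np.allclose(K_sum, K_concat))
```

Learning the kernel weights can therefore be read as learning a separate scaling for each feature space.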

Run the solver, using random search¶

This method should work well for a small number of kernels (< 20). The more kernels there are, the more samples of the hyperparameter space we need (i.e. the larger n_iter should be).

Here we use 100 iterations to keep the example reasonably fast (~40 sec). Better convergence probably requires more iterations. Note that this method currently has no stopping criterion.

n_iter = 100

Grid of regularization parameters.

alphas = np.logspace(-10, 10, 21)

Batch parameters are used to reduce the necessary GPU memory. A larger value will be a bit faster, but the solver might crash if it runs out of memory. Optimal values depend on the size of your dataset.

n_targets_batch = 1000

n_alphas_batch = 20
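The idea behind batching can be sketched with plain numpy. This is a generic illustration, not himalaya's internal implementation, and iter_batches is a hypothetical helper: solving a ridge system for a few targets at a time gives the same result as solving for all targets at once, while keeping intermediate arrays smaller.

```python
import numpy as np


def iter_batches(n_total, batch_size):
    """Yield slices covering range(n_total) in chunks of batch_size."""
    for start in range(0, n_total, batch_size):
        yield slice(start, min(start + batch_size, n_total))


rng = np.random.RandomState(0)
K = rng.randn(20, 5)
K = K @ K.T                      # (20, 20) positive semi-definite kernel
Y = rng.randn(20, 7)             # 7 targets
alpha = 1.0

# Solve (K + alpha * I) w = Y for all targets at once...
A = K + alpha * np.eye(20)
w_full = np.linalg.solve(A, Y)

# ...and for 3 targets at a time, which bounds the size of intermediates.
w_batched = np.empty_like(Y)
for batch in iter_batches(Y.shape[1], 3):
    w_batched[:, batch] = np.linalg.solve(A, Y[:, batch])

print(np.allclose(w_full, w_batched))
```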

If return_weights == "dual", the solver will use more memory. To mitigate this, you can reduce the number of targets per batch in the refit using n_targets_batch_refit. If you don't need the dual weights, use return_weights = None.

return_weights = 'dual'

n_targets_batch_refit = 200

Run the solver. For each iteration, it will:

sample kernel weights from a Dirichlet distribution

fit (n_splits * n_alphas * n_targets) ridge models

compute the scores on the validation set of each split

average the scores over splits

take the maximum over alphas

(only if you ask for the ridge weights) refit using the best alphas per target and the entire dataset

return for each target the log kernel weights leading to the best CV score (and the best weights if necessary)

results = solve_multiple_kernel_ridge_random_search(

Ks=Ks_train,

Y=Y_train,

n_iter=n_iter,

alphas=alphas,

n_targets_batch=n_targets_batch,

return_weights=return_weights,

n_alphas_batch=n_alphas_batch,

n_targets_batch_refit=n_targets_batch_refit,

jitter_alphas=True,

)

[ ] 0% | 0.00 sec | 100 random sampling with cv |

[..............................] 100% | 109.45 sec | 100 random sampling with cv | 0.91 it/s, ETA: 00:00:00
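The steps listed above can be sketched in a few lines of numpy. This is a toy illustration under stated assumptions, not the library's implementation: the data are synthetic, the Dirichlet concentration is fixed to ones, the score is the negative mean squared error, and the final refit on the entire dataset is omitted.

```python
import numpy as np

rng = np.random.RandomState(0)
n_samples, n_kernels, n_targets = 60, 3, 4

# Toy data: three feature spaces, targets driven mostly by the first one.
Xs = [rng.randn(n_samples, 5) for _ in range(n_kernels)]
Y = Xs[0] @ rng.randn(5, n_targets) + 0.1 * rng.randn(n_samples, n_targets)
Ks = np.stack([X @ X.T for X in Xs])

alphas = np.logspace(-2, 2, 5)
folds = np.array_split(rng.permutation(n_samples), 3)

best_scores = np.full(n_targets, -np.inf)
best_gammas = np.zeros((n_kernels, n_targets))
best_alphas = np.zeros(n_targets)

for _ in range(20):
    # Sample kernel weights on the simplex.
    gamma = rng.dirichlet(np.ones(n_kernels))
    K = np.tensordot(gamma, Ks, axes=1)

    # Fit one ridge per (split, alpha) for all targets, and score each
    # model on the held-out fold.
    scores = np.zeros((len(folds), len(alphas), n_targets))
    for ii, val in enumerate(folds):
        train = np.setdiff1d(np.arange(n_samples), val)
        K_tt = K[np.ix_(train, train)]
        K_vt = K[np.ix_(val, train)]
        for jj, alpha in enumerate(alphas):
            w = np.linalg.solve(K_tt + alpha * np.eye(len(train)), Y[train])
            scores[ii, jj] = -((Y[val] - K_vt @ w) ** 2).mean(0)

    # Average over splits, then take the maximum over alphas per target.
    mean_scores = scores.mean(0)
    iter_scores = mean_scores.max(0)
    iter_alphas = alphas[mean_scores.argmax(0)]

    # Keep, for each target, the best candidate seen so far.
    improved = iter_scores > best_scores
    best_scores[improved] = iter_scores[improved]
    best_gammas[:, improved] = gamma[:, None]
    best_alphas[improved] = iter_alphas[improved]

print(best_gammas.round(2))
print(best_alphas)
```

Because the best candidate is tracked independently for each target, different targets can end up with different kernel weights and different alphas.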

As we requested the cupy backend, the results may be cupy arrays stored on GPU. Here, we move the results back to CPU and convert them to numpy arrays.

deltas = backend.to_numpy(results[0])

dual_weights = backend.to_numpy(results[1])

cv_scores = backend.to_numpy(results[2])

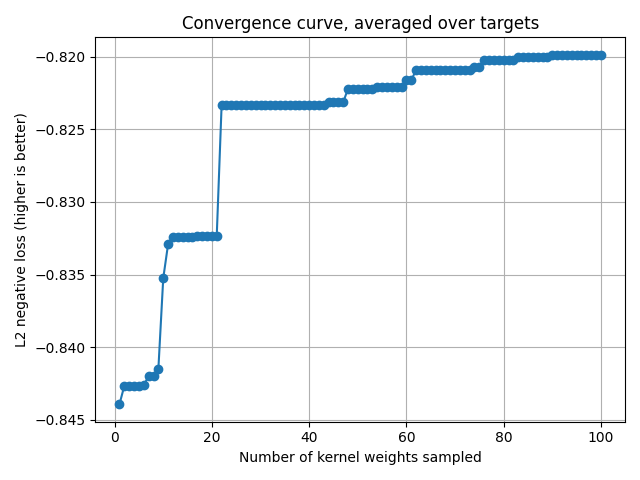

Plot the convergence curve¶

cv_scores gives the scores for each sampled set of kernel weights. The convergence curve is thus the running maximum of these scores for each target.

current_max = np.maximum.accumulate(cv_scores, axis=0)

mean_current_max = np.mean(current_max, axis=1)

x_array = np.arange(1, len(mean_current_max) + 1)

plt.plot(x_array, mean_current_max, '-o')

plt.grid(True)

plt.xlabel("Number of kernel weights sampled")

plt.ylabel("L2 negative loss (higher is better)")

plt.title("Convergence curve, averaged over targets")

plt.tight_layout()

plt.show()

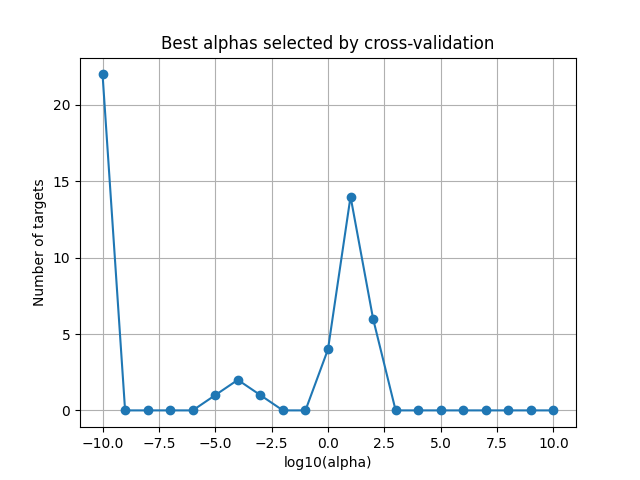
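As a quick check of what np.maximum.accumulate does along the first axis, here is a tiny worked example with made-up scores for four sampled sets of kernel weights (rows) and two targets (columns).

```python
import numpy as np

# Hypothetical scores for 4 sampled kernel weights (rows) and 2 targets.
cv_scores = np.array([[0.1, 0.5],
                      [0.3, 0.2],
                      [0.2, 0.6],
                      [0.4, 0.1]])

# Running maximum down each column: the best score seen so far per target.
current_max = np.maximum.accumulate(cv_scores, axis=0)
print(current_max)
# [[0.1 0.5]
#  [0.3 0.5]
#  [0.3 0.6]
#  [0.4 0.6]]

# Averaging over targets gives one non-decreasing curve.
mean_current_max = np.mean(current_max, axis=1)
print(mean_current_max)
```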

Plot the optimal alphas selected by the solver¶

This plot is helpful to refine the alpha grid if the range is too small or too large.

best_alphas = 1. / np.sum(np.exp(deltas), axis=0)

plot_alphas_diagnostic(best_alphas, alphas)

plt.title("Best alphas selected by cross-validation")

plt.show()

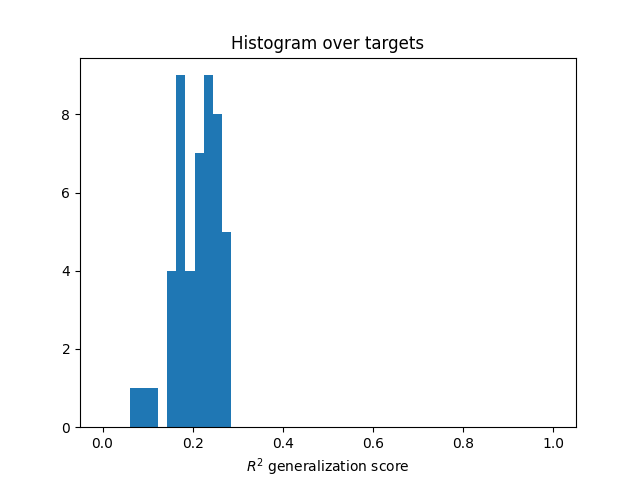
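The formula for best_alphas above can be understood with a small round trip. The sketch assumes that deltas combine kernel weights (called gammas here, summing to one for each target) and a regularization alpha as deltas = log(gammas / alpha). This parameterization is an assumption inferred from the formula above, and the numbers are made up.

```python
import numpy as np

# Hypothetical kernel weights (n_kernels, n_targets); each column sums to 1.
gammas = np.array([[0.5, 0.2],
                   [0.3, 0.2],
                   [0.2, 0.6]])
alphas = np.array([10.0, 0.01])  # one regularization value per target

# Assumed parameterization: deltas = log(gammas / alphas).
deltas = np.log(gammas / alphas)

# Since each column of gammas sums to one, sum(exp(deltas)) = 1 / alpha.
recovered_alphas = 1.0 / np.sum(np.exp(deltas), axis=0)
recovered_gammas = np.exp(deltas) * recovered_alphas

print(np.allclose(recovered_alphas, alphas))
print(np.allclose(recovered_gammas, gammas))
```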

Compute the predictions on the test set¶

(requires the dual weights)

split = False

scores = predict_and_score_weighted_kernel_ridge(

Ks_test, dual_weights, deltas, Y_test, split=split,

n_targets_batch=n_targets_batch, score_func=r2_score_split)

scores = backend.to_numpy(scores)

plt.hist(scores, np.linspace(0, 1, 50))

plt.xlabel(r"$R^2$ generalization score")

plt.title("Histogram over targets")

plt.show()

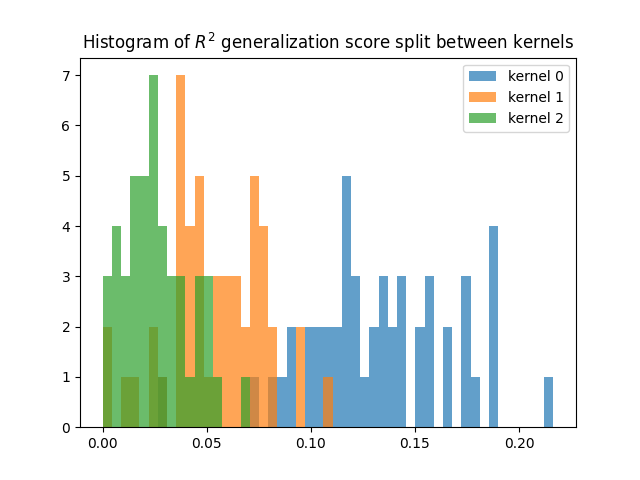
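For reference, the usual per-target R2 score can be sketched as follows. This is the textbook coefficient of determination, not himalaya's r2_score_split, and r2_per_target is a hypothetical helper: a perfect prediction scores 1, and predicting the mean of each target scores 0.

```python
import numpy as np


def r2_per_target(y_true, y_pred):
    """Coefficient of determination, computed independently per column."""
    ss_res = ((y_true - y_pred) ** 2).sum(0)
    ss_tot = ((y_true - y_true.mean(0)) ** 2).sum(0)
    return 1.0 - ss_res / ss_tot


rng = np.random.RandomState(0)
y_true = rng.randn(100, 3)

# Predicting the targets exactly, and predicting only their mean.
perfect = r2_per_target(y_true, y_true)
mean_only = r2_per_target(y_true, np.tile(y_true.mean(0), (100, 1)))
print(perfect, mean_only)
```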

Compute the split predictions on the test set¶

(requires the dual weights)

Here we apply the dual weights on each kernel separately (exp(deltas[i]) * kernel[i]), and we compute the R2 scores (corrected for correlations) of each prediction.

split = True

scores_split = predict_and_score_weighted_kernel_ridge(

Ks_test, dual_weights, deltas, Y_test, split=split,

n_targets_batch=n_targets_batch, score_func=r2_score_split)

scores_split = backend.to_numpy(scores_split)

for kk, score in enumerate(scores_split):

plt.hist(score, np.linspace(0, np.max(scores_split), 50), alpha=0.7,

label="kernel %d" % kk)

plt.title(r"Histogram of $R^2$ generalization score split between kernels")

plt.legend()

plt.show()
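The split predictions described above can be sketched with numpy. The arrays here are random placeholders with the same layout as Ks_test, dual_weights and deltas, and the sketch covers only the predictions, not the correlation-corrected R2 scores: the per-kernel predictions sum to the prediction obtained from the weighted sum of kernels.

```python
import numpy as np

rng = np.random.RandomState(0)
n_kernels, n_train, n_test, n_targets = 3, 20, 8, 2

# Random placeholders with the same layout as in the example above.
Ks_test = rng.randn(n_kernels, n_test, n_train)
dual_weights = rng.randn(n_train, n_targets)
deltas = rng.randn(n_kernels, n_targets)

# Per-kernel predictions, shape (n_kernels, n_test, n_targets).
Y_hat_split = np.stack([
    np.exp(deltas[kk])[None, :] * (Ks_test[kk] @ dual_weights)
    for kk in range(n_kernels)
])

# Full prediction from the weighted sum of kernels, computed per target.
Y_hat = np.stack([
    np.tensordot(np.exp(deltas[:, tt]), Ks_test, axes=1) @ dual_weights[:, tt]
    for tt in range(n_targets)
], axis=1)

print(np.allclose(Y_hat_split.sum(0), Y_hat))
```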

Total running time of the script: (1 minutes 50.164 seconds)