Vim-2 tutorial

Note

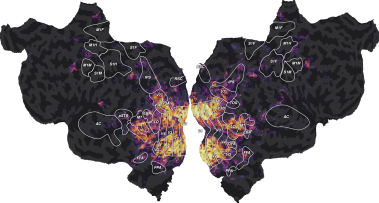

This tutorial is redundant with the Shortclips tutorial. It uses the “vim-2” data set, which contains brain responses limited to the occipital lobe and provides no mappers to plot the data on flatmaps. The Shortclips tutorial, which uses full-brain responses, is recommended instead.

This tutorial describes how to use the Voxelwise Encoding Model framework in a visual imaging experiment.

Data set

This tutorial is based on publicly available data published on CRCNS [Nishimoto et al., 2014]. The data is briefly described in the dataset description PDF, and in more detail in the original publication [Nishimoto et al., 2011]. If you publish work using this data set, please cite both the original publication [Nishimoto et al., 2011] and the CRCNS data set [Nishimoto et al., 2014].

Requirements

This tutorial requires the following Python packages:

- `voxelwise_tutorials` and its dependencies (see this page for installation instructions)
- `cupy` or `pytorch` (optional, required to use a GPU backend in himalaya)
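Before running the tutorial, it can help to check which of these packages are importable and which himalaya backend is likely to work. The following is a minimal sketch, not part of the tutorial itself: `installed` and `pick_backend_name` are hypothetical helpers introduced here for illustration, and the sketch assumes the packages are importable as `voxelwise_tutorials`, `himalaya`, `cupy`, and `torch`. The only himalaya call used is `set_backend`, with `on_error="warn"` so that a failed GPU backend falls back to the numpy backend with a warning.

```python
from importlib.util import find_spec


def installed(package):
    """Return True if `package` can be found in this environment."""
    return find_spec(package) is not None


def pick_backend_name():
    """Hypothetical helper: pick a himalaya backend name from what is installed."""
    if installed("cupy"):
        return "cupy"
    if installed("torch"):
        return "torch_cuda"
    return "numpy"  # CPU backend, always available in himalaya


# Report which of the required and optional packages were found.
status = {
    name: installed(name)
    for name in ("voxelwise_tutorials", "himalaya", "cupy", "torch")
}
for name, found in status.items():
    print(f"{name}: {'found' if found else 'missing'}")

backend_name = pick_backend_name()
print(f"suggested himalaya backend: {backend_name}")

if status["himalaya"]:
    from himalaya.backend import set_backend

    # With on_error="warn", himalaya emits a warning and falls back to
    # the numpy backend if the requested backend cannot be loaded
    # (for example, when no GPU is available).
    backend = set_backend(backend_name, on_error="warn")
```

Finding a package only shows that it is installed, not that a GPU is usable, which is why the sketch relies on the `on_error="warn"` fallback rather than assuming the GPU backend will load.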

References

[Nishimoto et al., 2011] S. Nishimoto, A. T. Vu, T. Naselaris, Y. Benjamini, B. Yu, and J. L. Gallant. Reconstructing visual experiences from brain activity evoked by natural movies. Current Biology, 21(19):1641–1646, 2011.

[Nishimoto et al., 2014] S. Nishimoto, A. T. Vu, T. Naselaris, Y. Benjamini, B. Yu, and J. L. Gallant. Gallant Lab natural movie 4T fMRI data. CRCNS, 2014. doi:10.6080/K00Z715X.