Shortclips tutorial

This tutorial describes how to use the Voxelwise Encoding Model framework in a visual imaging experiment.

Dataset

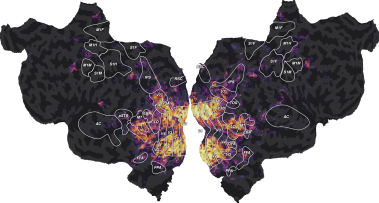

This tutorial is based on publicly available data published on GIN [Huth et al., 2022]. The data set contains BOLD fMRI responses from human subjects viewing a set of natural short movie clips. The functional data were collected from five subjects, in three sessions over three separate days for each subject. Details of the experiment are described in the original publication [Huth et al., 2012]. If you publish work using this data set, please cite both the original publication [Huth et al., 2012] and the GIN data set [Huth et al., 2022].

Models

This tutorial shows and compares analyses based on three different voxelwise encoding models:

- A ridge model with wordnet semantic features, as described in [Huth et al., 2012].
- A ridge model with motion-energy features, as described in [Nishimoto et al., 2011].
- A banded-ridge model with both feature spaces, as described in [Nunez-Elizalde et al., 2019].
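To make the difference between these models concrete, here is a minimal NumPy sketch of the closed-form solution, under stated assumptions: the data are random stand-ins (not the real wordnet or motion-energy features), the helper name `banded_ridge` is hypothetical, and the penalties are fixed by hand rather than selected by cross-validation. It is an illustration of the idea, not the solver used in the tutorials. A plain ridge model applies one penalty to all features, while a banded-ridge model applies a separate penalty to each feature space.

```python
import numpy as np


def banded_ridge(feature_spaces, Y, alphas):
    """Closed-form ridge with one penalty per feature space (hypothetical helper).

    feature_spaces : list of arrays, each of shape (n_samples, n_features_i)
    Y : array of shape (n_samples, n_voxels)
    alphas : one non-negative penalty per feature space
    """
    X = np.hstack(feature_spaces)
    # Repeat each band's penalty over the columns of that band.
    penalties = np.concatenate([
        np.full(Xi.shape[1], alpha)
        for Xi, alpha in zip(feature_spaces, alphas)
    ])
    # Solve (X^T X + diag(penalties)) W = X^T Y for the weights W.
    return np.linalg.solve(X.T @ X + np.diag(penalties), X.T @ Y)


rng = np.random.default_rng(0)
n_samples, n_voxels = 200, 5
X_semantic = rng.standard_normal((n_samples, 8))   # stand-in for semantic features
X_motion = rng.standard_normal((n_samples, 12))    # stand-in for motion-energy features
Y = rng.standard_normal((n_samples, n_voxels))     # stand-in for voxel responses

# Equal penalties across bands reduce to an ordinary ridge model.
W_ridge = banded_ridge([X_semantic, X_motion], Y, alphas=[10.0, 10.0])
# Different penalties per band give a banded-ridge model.
W_banded = banded_ridge([X_semantic, X_motion], Y, alphas=[1.0, 100.0])
print(W_ridge.shape, W_banded.shape)
```

With equal penalties the banded solution coincides with ordinary ridge regression on the concatenated features, which is why banded ridge can be seen as a generalization of ridge to several feature spaces.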

Scikit-learn API

These tutorials use scikit-learn to define the preprocessing steps, the modeling pipeline, and the cross-validation scheme. If you are not familiar with the scikit-learn API, we recommend the getting started guide. We also use scikit-learn terminology throughout, which is explained in detail in the glossary of common terms and API elements.
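For readers new to this API, the three ingredients named above (preprocessing, pipeline, cross-validation) can be sketched as follows. This is a generic scikit-learn example on synthetic data, not the pipeline used in the tutorials; the array sizes, the `alpha` value, and the number of folds are arbitrary assumptions chosen for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data: 120 samples, 10 features, 3 targets (stand-ins for voxels).
rng = np.random.default_rng(0)
X = rng.standard_normal((120, 10))
true_weights = rng.standard_normal((10, 3))
Y = X @ true_weights + 0.1 * rng.standard_normal((120, 3))

# A pipeline chains a preprocessing step and an estimator into one object.
pipeline = make_pipeline(StandardScaler(), Ridge(alpha=1.0))

# The cross-validation scheme is itself an object passed to the scoring helper.
cv = KFold(n_splits=4)
scores = cross_val_score(pipeline, X, Y, cv=cv)  # one R^2 score per fold
print(scores)

# The pipeline exposes the usual fit / predict methods.
pipeline.fit(X, Y)
predictions = pipeline.predict(X)
print(predictions.shape)
```

The same `fit` / `predict` / `score` conventions apply to any scikit-learn compatible estimator, so the final step of the pipeline can be swapped without changing the rest of the code.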

Running time

Most of these tutorials run in a reasonable time on a GPU (under 1 minute for most examples, about 7 minutes for the banded-ridge example). Running the examples on a CPU is much slower, typically by a factor of about 10.

Requirements

This tutorial requires the following Python packages:

- voxelwise_tutorials and its dependencies (see this page for installation instructions)
- cupy or pytorch (optional, required to use a GPU backend in himalaya)
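As a quick sanity check before running the tutorials, the following sketch reports which of these packages can be found in the current environment. It relies only on the standard library; the helper name `installed` is hypothetical, and the import names are assumed to be `voxelwise_tutorials`, `cupy`, and `torch` (the usual import name for PyTorch).

```python
from importlib.util import find_spec


def installed(module_name):
    """Return True if a top-level module can be found, without importing it."""
    return find_spec(module_name) is not None


required = ["voxelwise_tutorials"]
gpu_optional = ["cupy", "torch"]  # PyTorch is imported as `torch`

missing = [name for name in required if not installed(name)]
gpu_available = [name for name in gpu_optional if installed(name)]

print("Missing required packages:", missing or "none")
print("GPU-capable packages found:", gpu_available or "none (CPU only)")
```

Finding `cupy` or `torch` only shows that the package is installed; it does not guarantee that a working GPU and driver are present.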

References

A. G. Huth, S. Nishimoto, A. T. Vu, T. Dupré la Tour, and J. L. Gallant. Gallant lab natural short clips 3T fMRI data. 2022. doi:10.12751/g-node.vy1zjd.

A. G. Huth, S. Nishimoto, A. T. Vu, and J. L. Gallant. A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron, 76(6):1210–1224, 2012.

S. Nishimoto, A. T. Vu, T. Naselaris, Y. Benjamini, B. Yu, and J. L. Gallant. Reconstructing visual experiences from brain activity evoked by natural movies. Current Biology, 21(19):1641–1646, 2011.

A. O. Nunez-Elizalde, A. G. Huth, and J. L. Gallant. Voxelwise encoding models with non-spherical multivariate normal priors. NeuroImage, 197:482–492, 2019.