Selected Publications

A map of the cortical functional network mediating naturalistic navigation (Zhang, Meschke, Gallant, bioRxiv preprint, 2025)

December 17, 2025

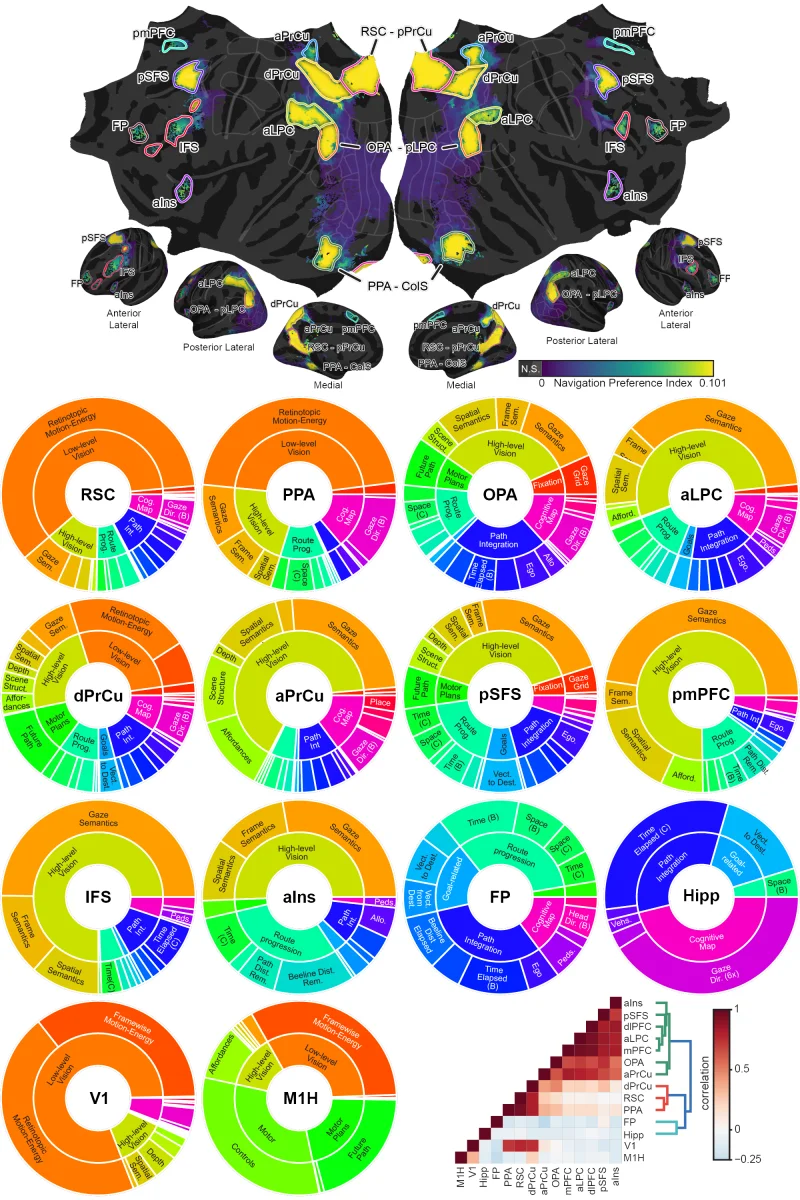

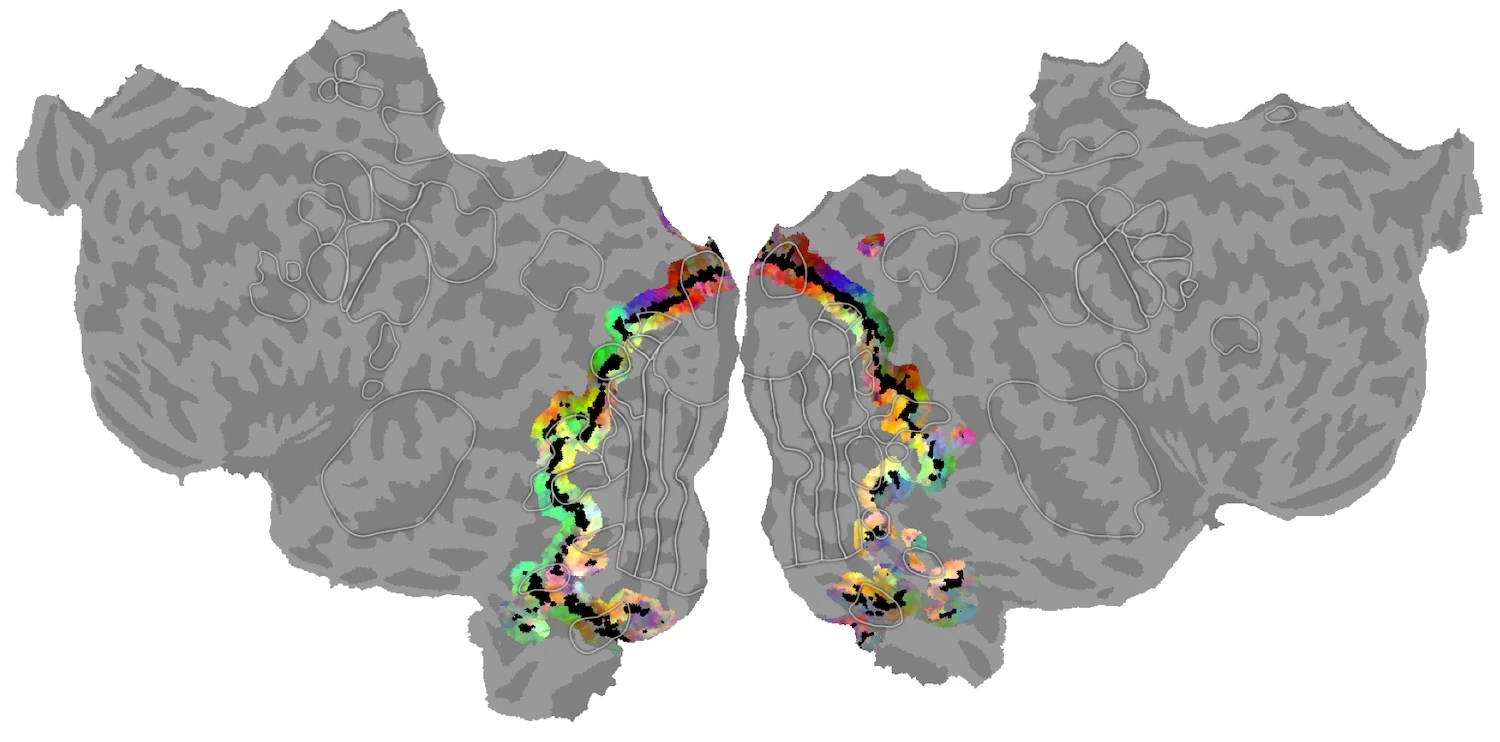

Natural navigation requires close coordination of perception, planning, and motor actions. We used fMRI to record brain activity while participants performed a taxi driver task in VR, then fit high-dimensional voxelwise encoding models to the data. Navigation is supported by a network of 11 functionally distinct cortical regions that transform perceptual inputs through decision-making processes to produce action outputs.

Disentangling Superpositions: Interpretable Brain Encoding Model with Sparse Concept Atoms (Zeng and Gallant, NeurIPS, 2025)

November 13, 2025

Dense ANN word embeddings entangle multiple concepts in each feature, making it difficult to interpret encoding model maps. We use a Sparse Concept Encoding Model to produce a feature space where each dimension corresponds to an interpretable concept. The resulting model matches the prediction performance of dense models while substantially enhancing interpretability.

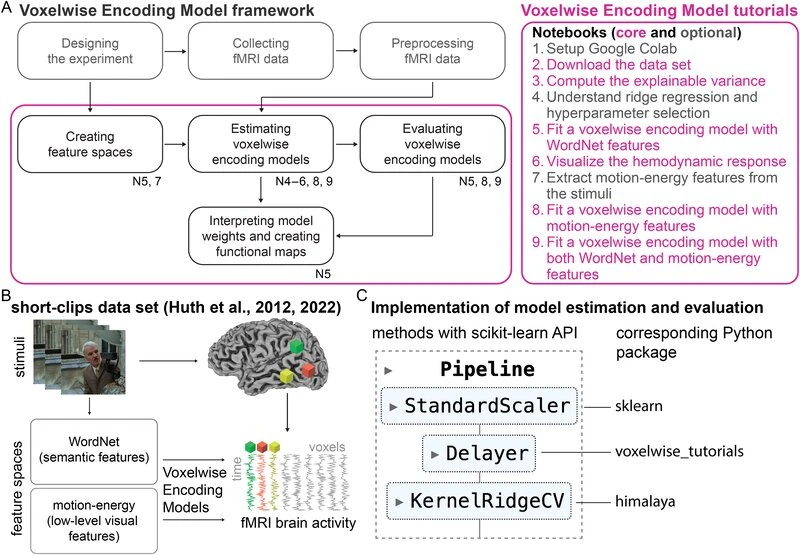

Encoding models in functional magnetic resonance imaging: the Voxelwise Encoding Model framework (Visconti di Oleggio Castello, Deniz, et al., PsyArXiv preprint, 2025)

September 17, 2025

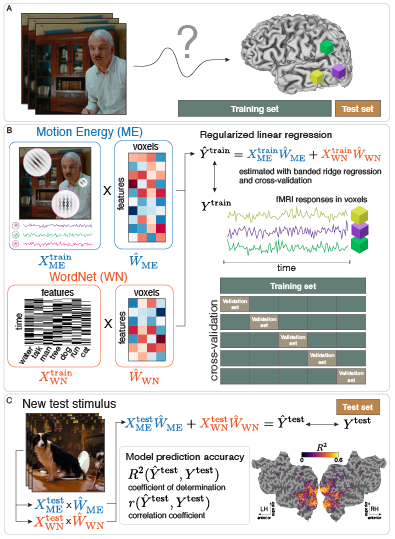

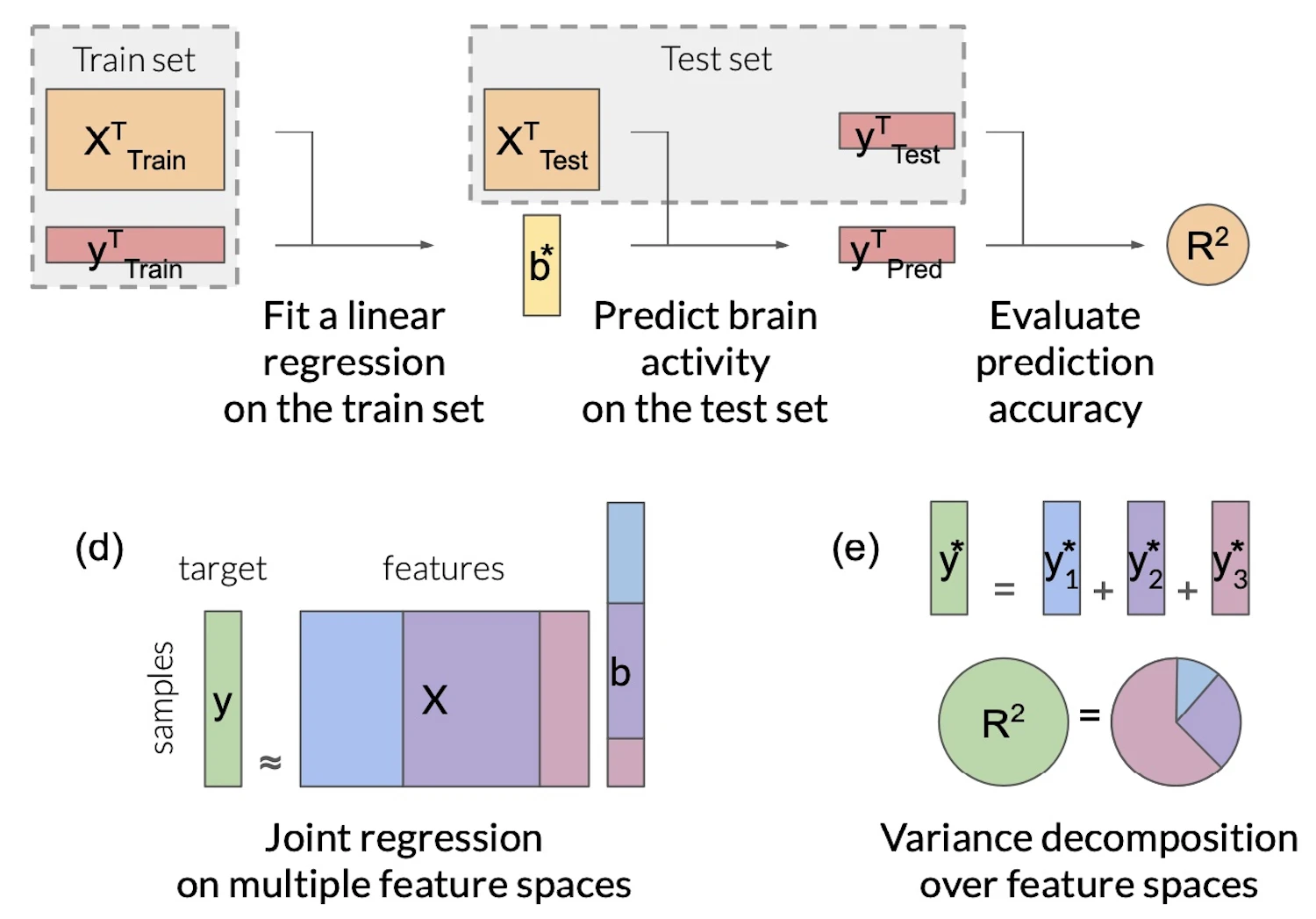

This review paper provides the first comprehensive guide to the Voxelwise Encoding Model (VEM) framework for fitting encoding models to fMRI data. The VEM framework is currently the most sensitive and powerful approach available for modeling fMRI data: it can fit dozens of distinct models simultaneously, each with up to several thousand features. It also conforms to all best practices in data science, which maximizes the sensitivity, reliability, and generalizability of the resulting models.

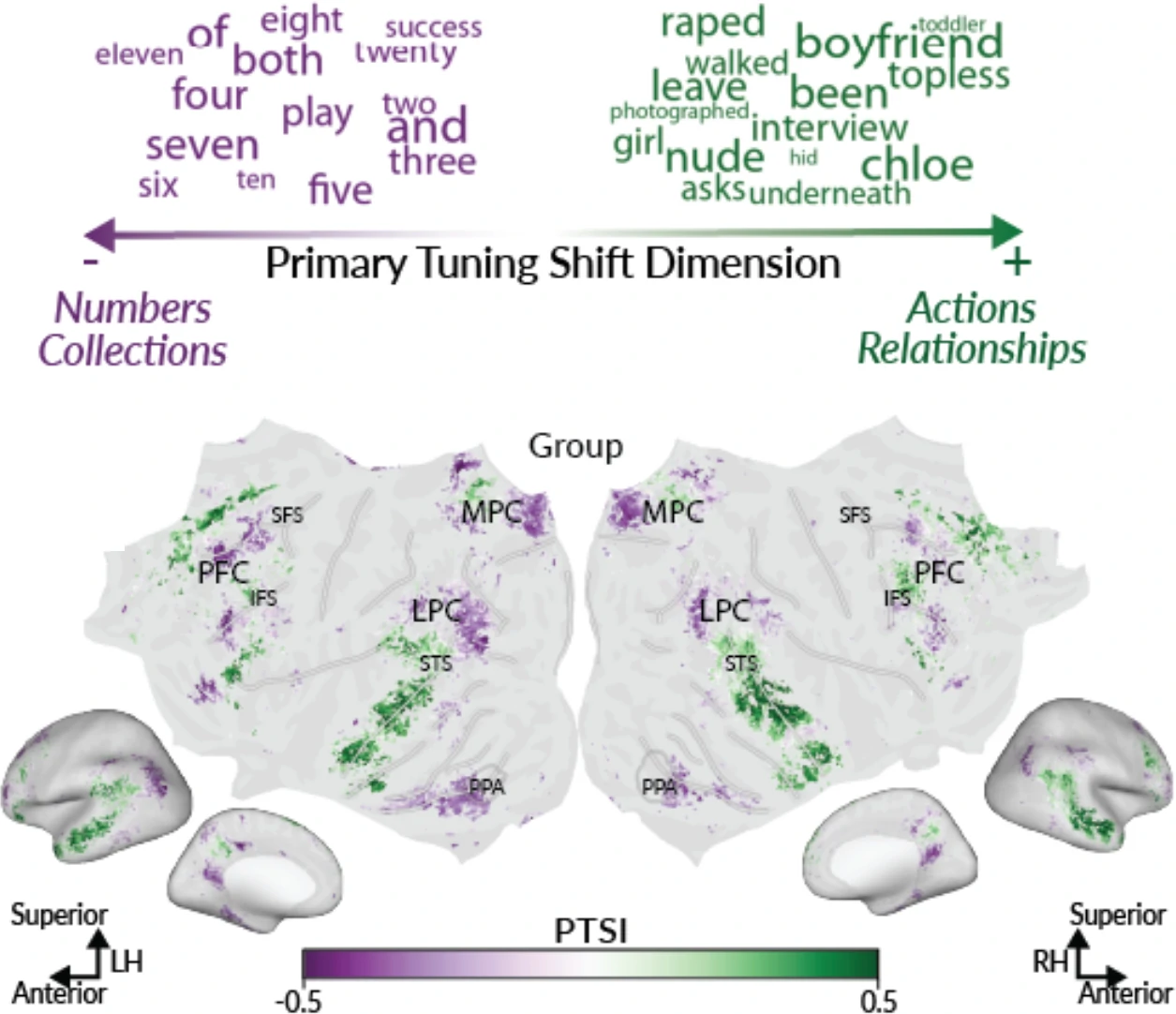

Individual differences shape conceptual representation in the brain (Visconti di Oleggio Castello et al., bioRxiv preprint, 2025)

August 22, 2025

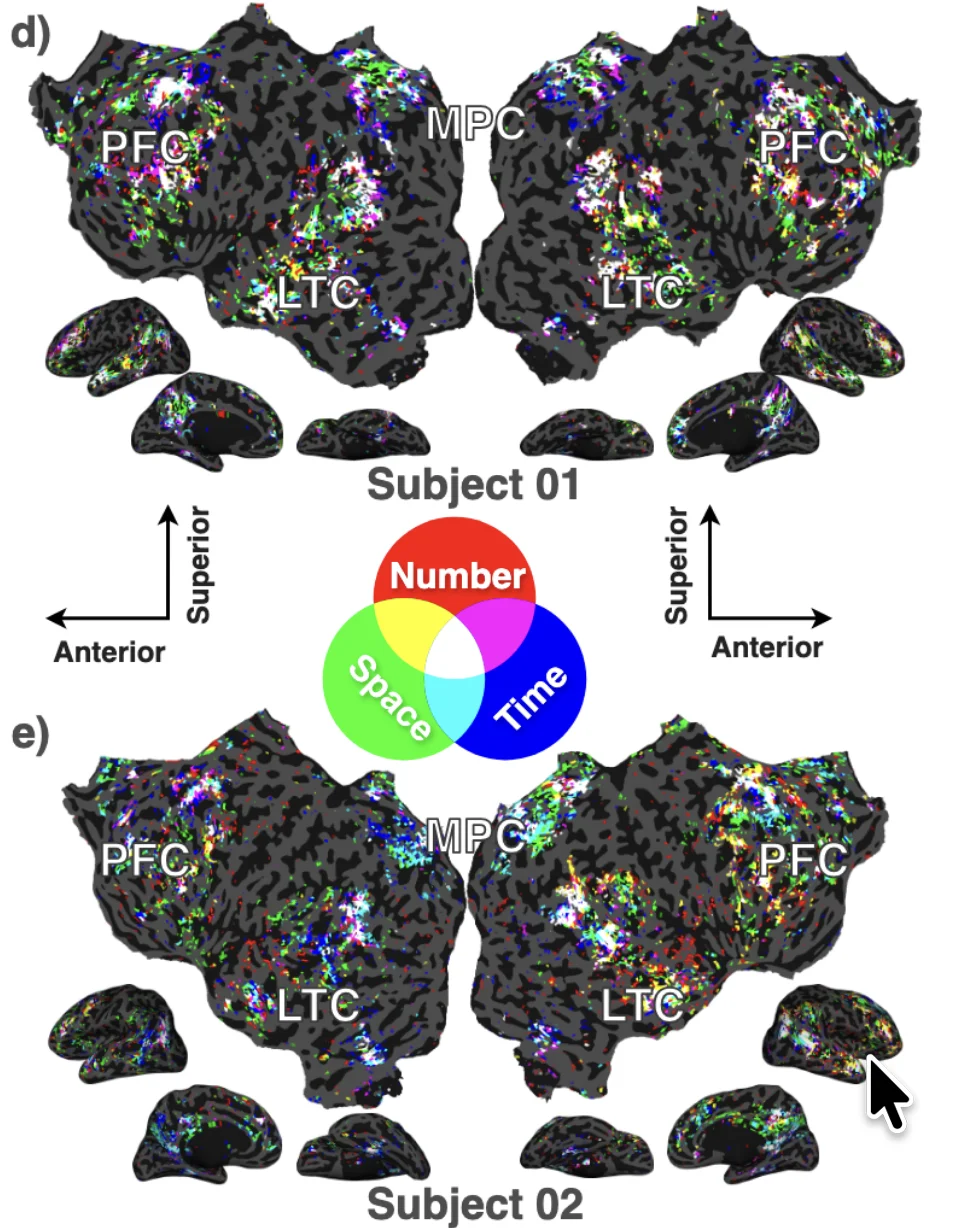

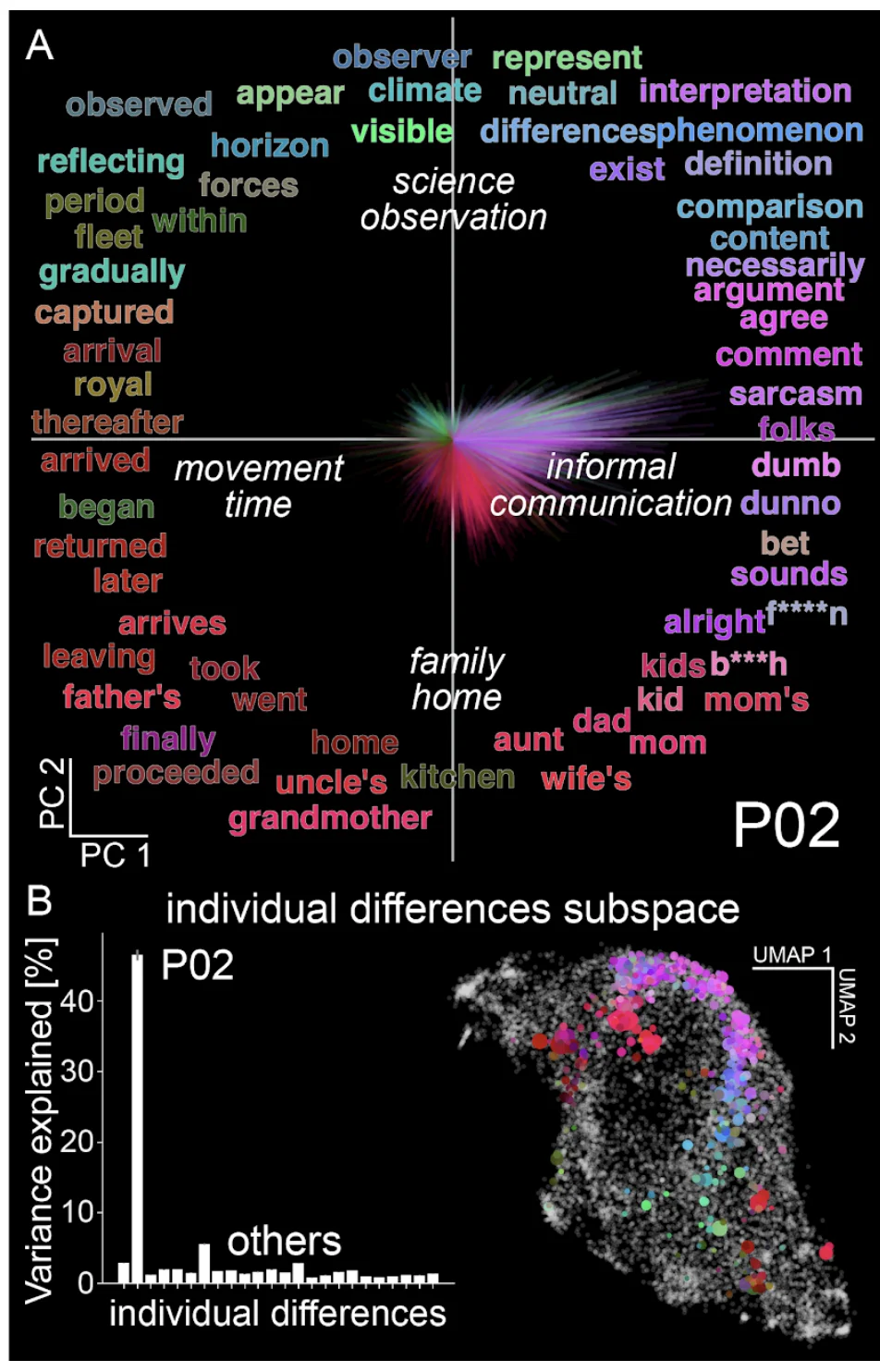

We developed a new computational framework to measure and interpret individual differences in functional brain maps. We found robust individual differences in conceptual representation that reflect cognitive traits unique to each person. This framework enables new precision neuroscience approaches to the study of complex functional representations.

The Voxelwise Encoding Model framework: A tutorial introduction to fitting encoding models to fMRI data (Dupré la Tour et al., Imaging Neuroscience, 2025)

May 9, 2025

This tutorial provides practical guidance on using the Voxelwise Encoding Model (VEM) framework for functional brain mapping. It includes hands-on examples with public datasets, code repositories, and interactive notebooks to make this powerful methodology accessible to researchers at all levels.
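The core fitting step of a voxelwise encoding model can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not code from the tutorial or its repositories: the data are synthetic, the hemodynamic delays (1 to 4 samples) are assumed, and a single fixed ridge penalty `alpha` stands in for the per-voxel cross-validated hyperparameter search that a full analysis would use. The helper names `make_delayed`, `fit_ridge`, and `voxelwise_corr` are hypothetical.

```python
import numpy as np

def make_delayed(X, delays):
    """Concatenate time-shifted copies of X (n_samples x n_features) so a linear
    model can absorb the hemodynamic delay (a finite impulse response model)."""
    n_samples, n_features = X.shape
    out = np.zeros((n_samples, n_features * len(delays)))
    for i, d in enumerate(delays):
        # Column block i holds X shifted d samples later in time; the start is zero-padded.
        out[d:, i * n_features:(i + 1) * n_features] = X[:n_samples - d]
    return out

def fit_ridge(X, Y, alpha):
    """Closed-form ridge weights for all voxels at once: (X'X + alpha*I)^-1 X'Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)

def voxelwise_corr(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel."""
    zt = (Y_true - Y_true.mean(0)) / Y_true.std(0)
    zp = (Y_pred - Y_pred.mean(0)) / Y_pred.std(0)
    return (zt * zp).mean(0)

# Synthetic stand-in for stimulus features and BOLD responses (hypothetical data).
rng = np.random.default_rng(0)
n_features, n_voxels, delays = 10, 50, [1, 2, 3, 4]
W_true = rng.standard_normal((n_features * len(delays), n_voxels))

def simulate_run(n_samples):
    """Delay each run separately so no samples leak across the train/test boundary."""
    X = make_delayed(rng.standard_normal((n_samples, n_features)), delays)
    return X, X @ W_true + rng.standard_normal((n_samples, n_voxels))

X_train, Y_train = simulate_run(300)   # training runs
X_test, Y_test = simulate_run(100)     # held-out test run

W = fit_ridge(X_train, Y_train, alpha=10.0)
scores = voxelwise_corr(Y_test, X_test @ W)
print(f"mean held-out correlation: {scores.mean():.2f}")
```

Evaluating on a held-out run rather than on the training data is what makes the per-voxel correlations an estimate of how well each model generalizes.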

Bilingual language processing relies on shared semantic representations that are modulated by each language (Chen et al., bioRxiv preprint, 2024)

November 21, 2024

We recorded brain activity with fMRI while English-Chinese bilinguals read natural narratives in each language. Semantic representations are largely shared between the two languages, but finer-grained differences systematically modulate how the same meaning is represented in each language.

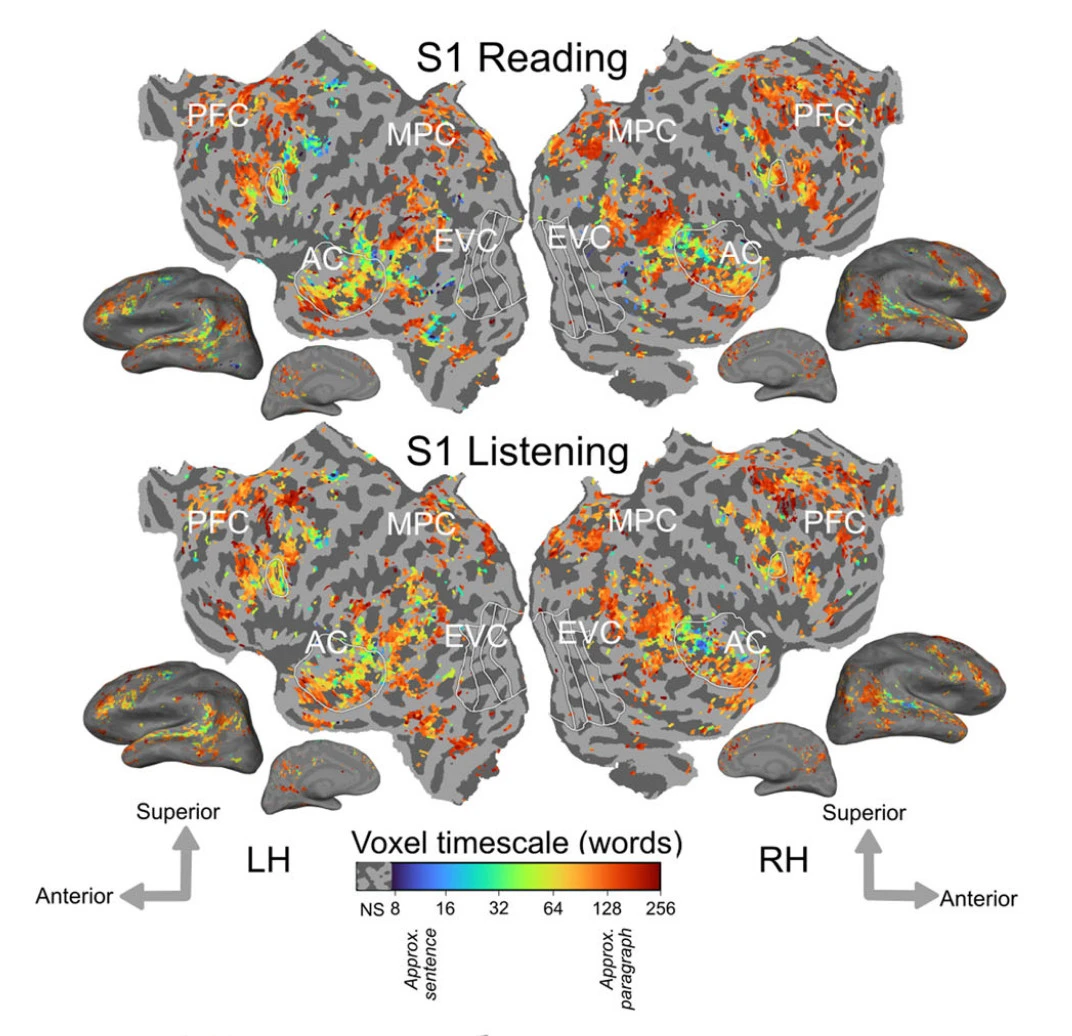

The cortical representation of language timescales is shared between reading and listening (Chen et al., Communications Biology, 2024)

July 1, 2024

Language comprehension involves integrating low-level sensory inputs into a hierarchy of increasingly high-level features. To recover this hierarchy, we mapped the intrinsic timescale of language representation across the cerebral cortex during listening and reading. We find that the timescale of representation is organized similarly for the two modalities.

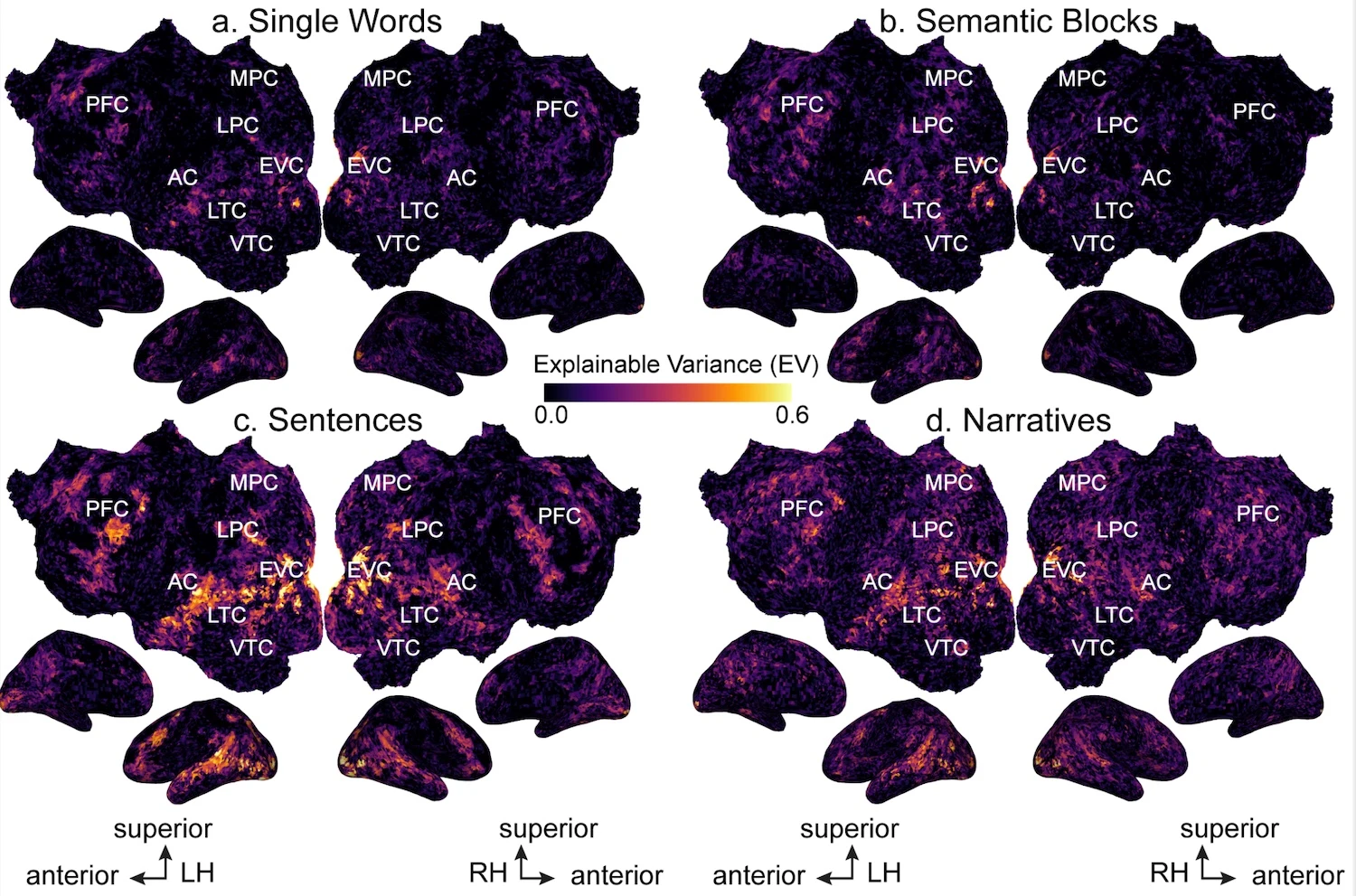

Semantic representations during language comprehension are affected by context (Deniz et al., Journal of Neuroscience, 2023)

April 26, 2023

Most neuroimaging studies of meaning use isolated words and sentences with little context. We find that increasing context improves the quality of neuroimaging data and changes where and how semantic information is represented in the brain. Findings from studies using out-of-context stimuli may not generalize to natural language used in daily life.

Phonemic segmentation of narrative speech in human cerebral cortex (Gong et al., Nature Communications, 2023)

June 29, 2023

This fMRI study identifies the brain representation of single phonemes, diphones, and triphones during natural speech. Many regions in and around auditory cortex represent phonemes, and we identify regions where phonemic processing and lexical retrieval are intertwined. (Collaboration with the Theunissen lab at UCB.)

Feature-space selection with banded ridge regression (Dupré la Tour et al., NeuroImage, 2022)

December 1, 2022

Encoding models identify the information represented in brain recordings, but fitting multiple models simultaneously presents several challenges. This paper describes how banded ridge regression addresses these challenges by learning a separate regularization strength for each feature space, which lets the model effectively select among feature spaces. We also propose several methods to address the computational challenge of fitting banded ridge regressions on large numbers of voxels and feature spaces. All implementations are released in an open-source Python package called Himalaya.
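Banded ridge regression differs from ordinary ridge regression only in its penalty: each feature space gets its own regularization strength. The NumPy sketch below illustrates that idea with a brute-force grid search over penalty combinations on synthetic data. It is not Himalaya's implementation or API; the functions `banded_ridge` and `select_alphas` and the grid values are hypothetical choices made for illustration.

```python
import itertools
import numpy as np

def banded_ridge(Xs, Y, alphas):
    """Ridge regression with one regularization strength per feature space.
    Xs: list of (n_samples, p_i) arrays; alphas: one positive value per space."""
    X = np.hstack(Xs)
    # Diagonal penalty: alpha_i repeated over the p_i columns of feature space i.
    penalty = np.concatenate([np.full(x.shape[1], a) for x, a in zip(Xs, alphas)])
    return np.linalg.solve(X.T @ X + np.diag(penalty), X.T @ Y)

def select_alphas(Xs_train, Y_train, Xs_val, Y_val, grid):
    """Per voxel, pick the alpha combination with the lowest validation error."""
    combos = list(itertools.product(grid, repeat=len(Xs_train)))
    X_val = np.hstack(Xs_val)
    errors = np.stack([
        ((Y_val - X_val @ banded_ridge(Xs_train, Y_train, combo)) ** 2).mean(0)
        for combo in combos
    ])  # shape: (n_combos, n_voxels)
    return [combos[i] for i in errors.argmin(0)]

# Hypothetical data: feature space 1 drives the voxels, feature space 2 is pure noise.
rng = np.random.default_rng(0)
n_train, n_val, n_voxels = 200, 200, 5
X1 = rng.standard_normal((n_train + n_val, 20))
X2 = rng.standard_normal((n_train + n_val, 50))
Y = X1 @ rng.standard_normal((20, n_voxels)) + rng.standard_normal((n_train + n_val, n_voxels))

grid = [0.1, 1.0, 10.0, 100.0, 1e3, 1e4]
best = select_alphas([X1[:n_train], X2[:n_train]], Y[:n_train],
                     [X1[n_train:], X2[n_train:]], Y[n_train:], grid)
for voxel, (a1, a2) in enumerate(best):
    print(f"voxel {voxel}: alpha_1={a1:g}, alpha_2={a2:g}")
```

In this toy setup the irrelevant feature space should receive a much larger penalty than the informative one, shrinking its weights toward zero; that is the sense in which banded ridge regression performs feature-space selection. The number of grid combinations grows exponentially with the number of feature spaces, which is why more efficient solvers matter at scale.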

Visual and linguistic semantic representations are aligned at the border of human visual cortex (Popham et al., Nature Neuroscience, 2021)

October 28, 2021

We examined the spatial organization of visual and amodal semantic functional maps. The patterns of semantic selectivity in these two networks correspond along the boundary of visual cortex: for each category represented visually on the posterior side of the boundary, the same category is represented linguistically on the anterior side. The two networks are smoothly joined to form one contiguous map.

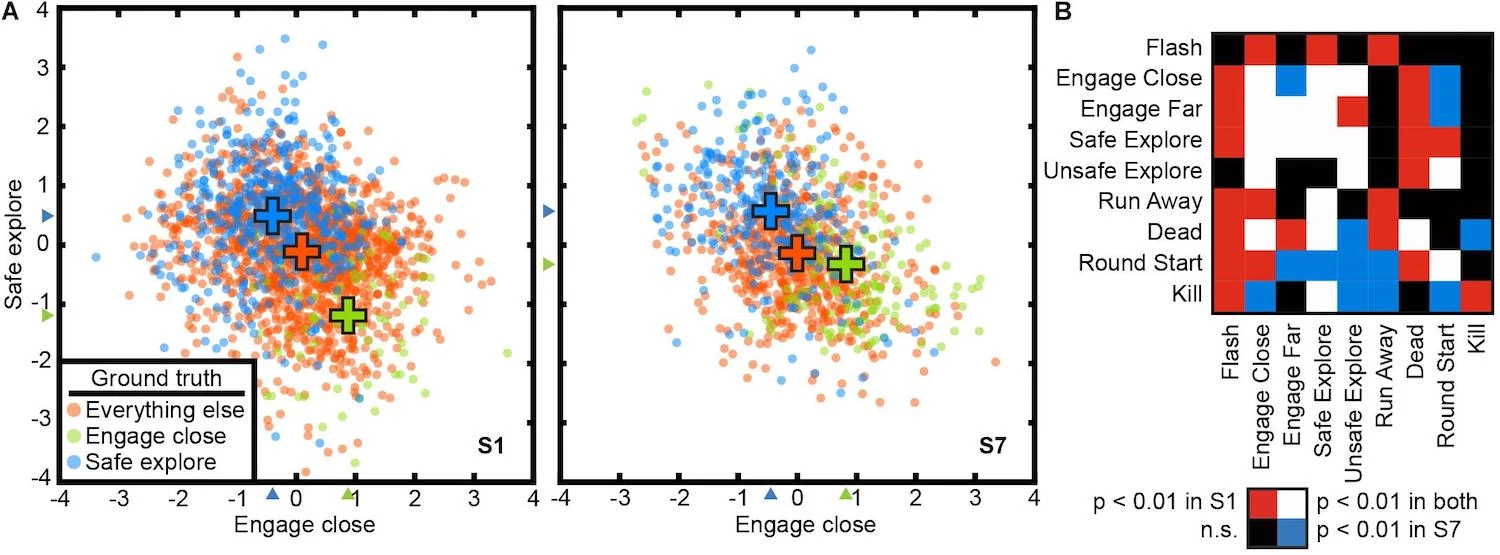

Voxel-based state space modeling recovers task-related cognitive states in naturalistic fMRI experiments (Zhang et al., Front. Neurosci., 2021)

May 1, 2021

We present a voxel-based state space modeling method for recovering task-related state spaces from fMRI data. When we applied it to a visual attention task and a video game task, we found that each task induces distinct brain states that can be embedded in a low-dimensional state space reflecting task parameters.

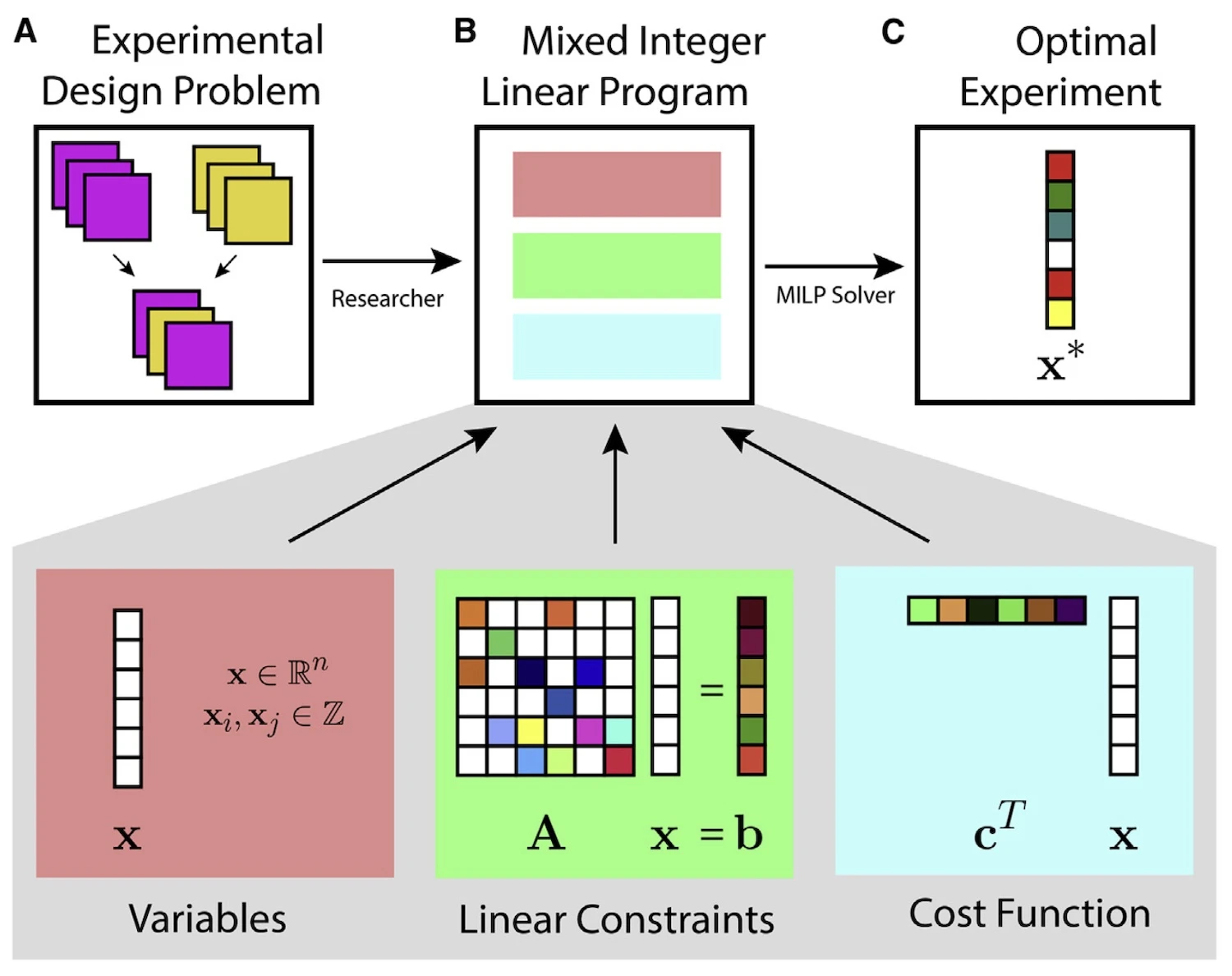

Design of complex neuroscience experiments using mixed-integer linear programming (Slivkoff and Gallant, Neuron, 2021)

May 5, 2021

This tutorial and primer reviews how mixed-integer linear programming can be used to optimize the design of complex experiments involving many different variables. The approach is particularly useful when designing complex fMRI experiments, such as question-answering studies, that aim to manipulate and probe many dimensions simultaneously.

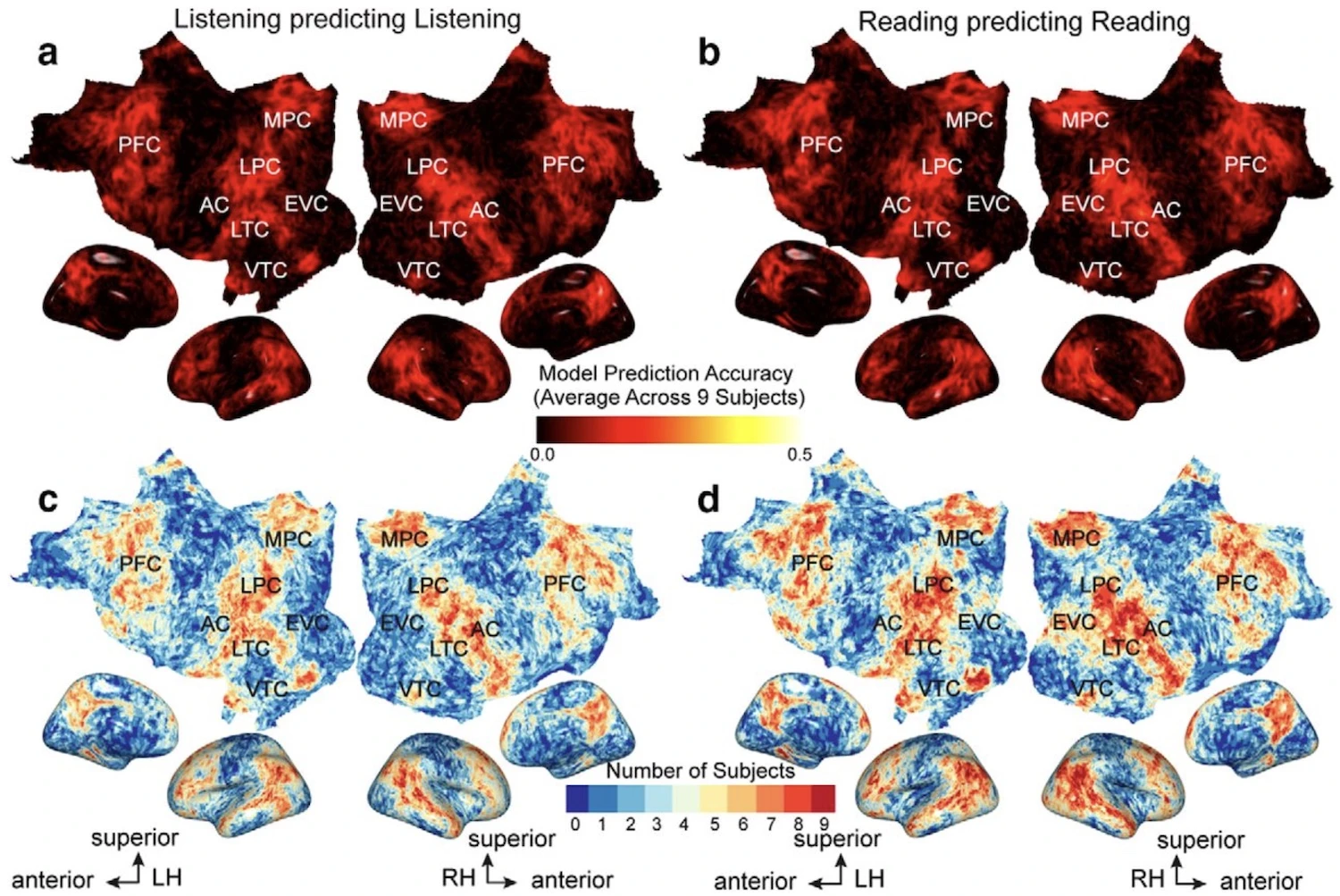
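A toy counterbalancing problem shows the flavor of the approach. The sketch below, which assumes SciPy 1.9 or later for `scipy.optimize.milp`, assigns eight hypothetical stimuli to a run so that a numeric attribute is balanced while a category constraint is respected. The stimuli, attribute values, and constraints are invented for illustration and are not taken from the paper.

```python
import numpy as np
from scipy.optimize import Bounds, LinearConstraint, milp

# Hypothetical design problem: 8 stimuli, each with a numeric attribute
# (e.g. word count) and a binary category label.
values = np.arange(1, 9, dtype=float)       # attribute of stimuli 1..8
category = np.array([0, 0, 0, 0, 1, 1, 1, 1])
target = values.sum() / 2                   # run 1 should hold half the total

n = len(values)
# Decision variables: x_0..x_7 (1 = stimulus goes in run 1) plus a slack t,
# which the constraints force to be >= |sum(values * x) - target|.
c = np.r_[np.zeros(n), 1.0]                 # objective: minimize t
integrality = np.r_[np.ones(n, dtype=int), 0]  # x integer (binary via bounds), t continuous
bounds = Bounds(np.zeros(n + 1), np.r_[np.ones(n), np.inf])

A = np.vstack([
    np.r_[np.ones(n), 0.0],                    # run 1 has exactly 4 stimuli
    np.r_[(category == 0).astype(float), 0.0], # ... including 2 from category 0
    np.r_[values, -1.0],                       # sum(values * x) - t <= target
    np.r_[values, 1.0],                        # sum(values * x) + t >= target
])
lb = np.array([4, 2, -np.inf, target])
ub = np.array([4, 2, target, np.inf])

res = milp(c, integrality=integrality, bounds=bounds,
           constraints=LinearConstraint(A, lb, ub))
run1 = np.flatnonzero(np.round(res.x[:n]))
print("run 1 stimuli:", run1 + 1, "imbalance:", res.fun)
```

The absolute imbalance is linearized with the slack variable `t` and two inequality constraints, a standard trick for keeping the problem linear; additional attributes or design constraints become additional rows of `A`.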

The representation of semantic information across human cerebral cortex during listening versus reading is invariant to stimulus modality (Deniz et al., J. Neurosci., 2019)

September 5, 2019

We show that although the representation of semantic information in the human brain is quite complex, the semantic representations evoked by listening versus reading are almost identical. The representation of language semantics is independent of the sensory modality through which the semantic information is received.

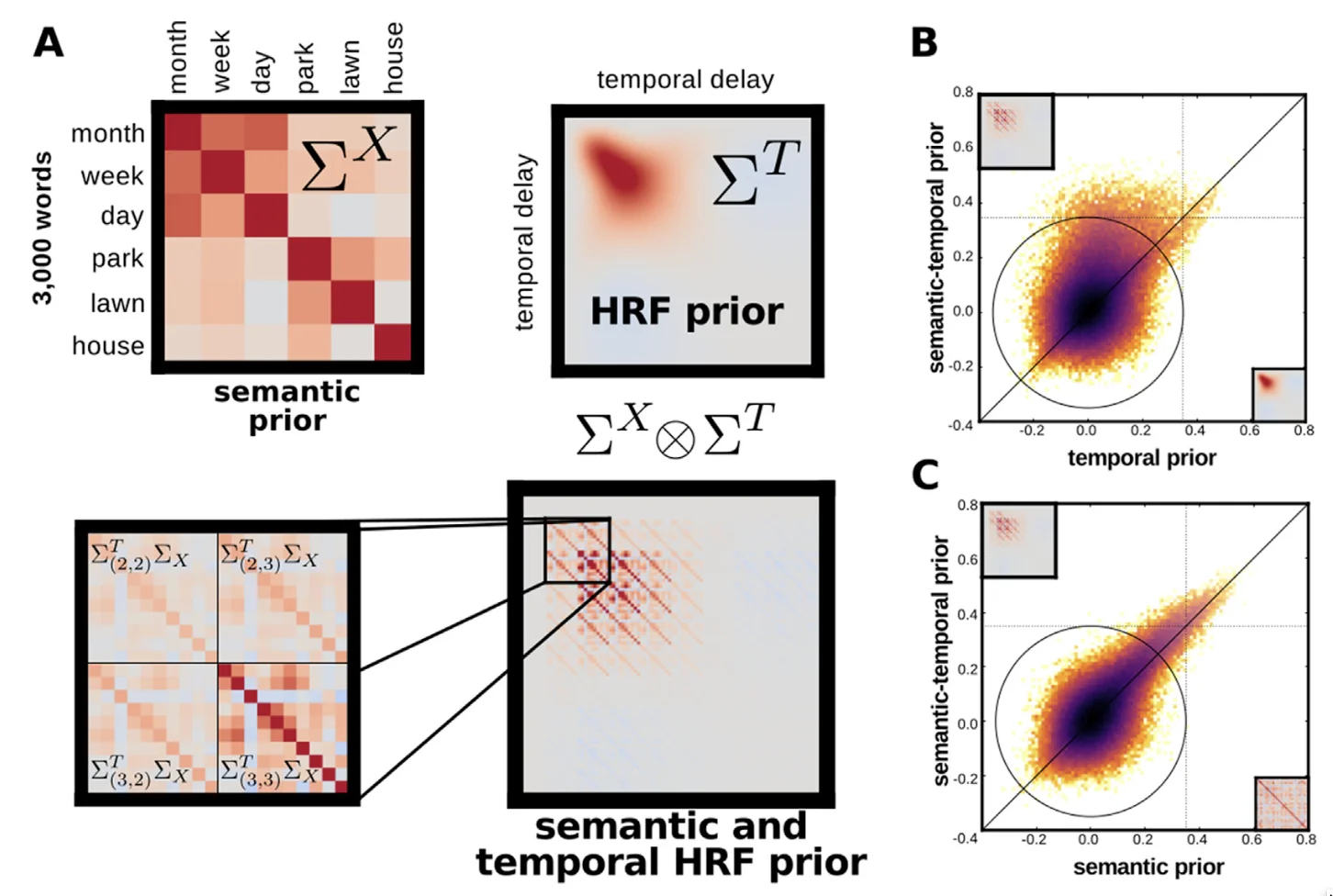

Voxelwise encoding models with non-spherical multivariate normal priors (Nunez-Elizalde, Huth & Gallant, NeuroImage, 2019)

August 15, 2019

Ridge regression assumes a spherical Gaussian prior with equal variance for all model parameters, but this is not always appropriate. This paper shows how non-spherical priors via Tikhonov regression can improve encoding models. A key application is banded ridge regression, which assigns a separate regularization parameter to each feature space and provides substantially better prediction accuracy when combining multiple feature spaces.

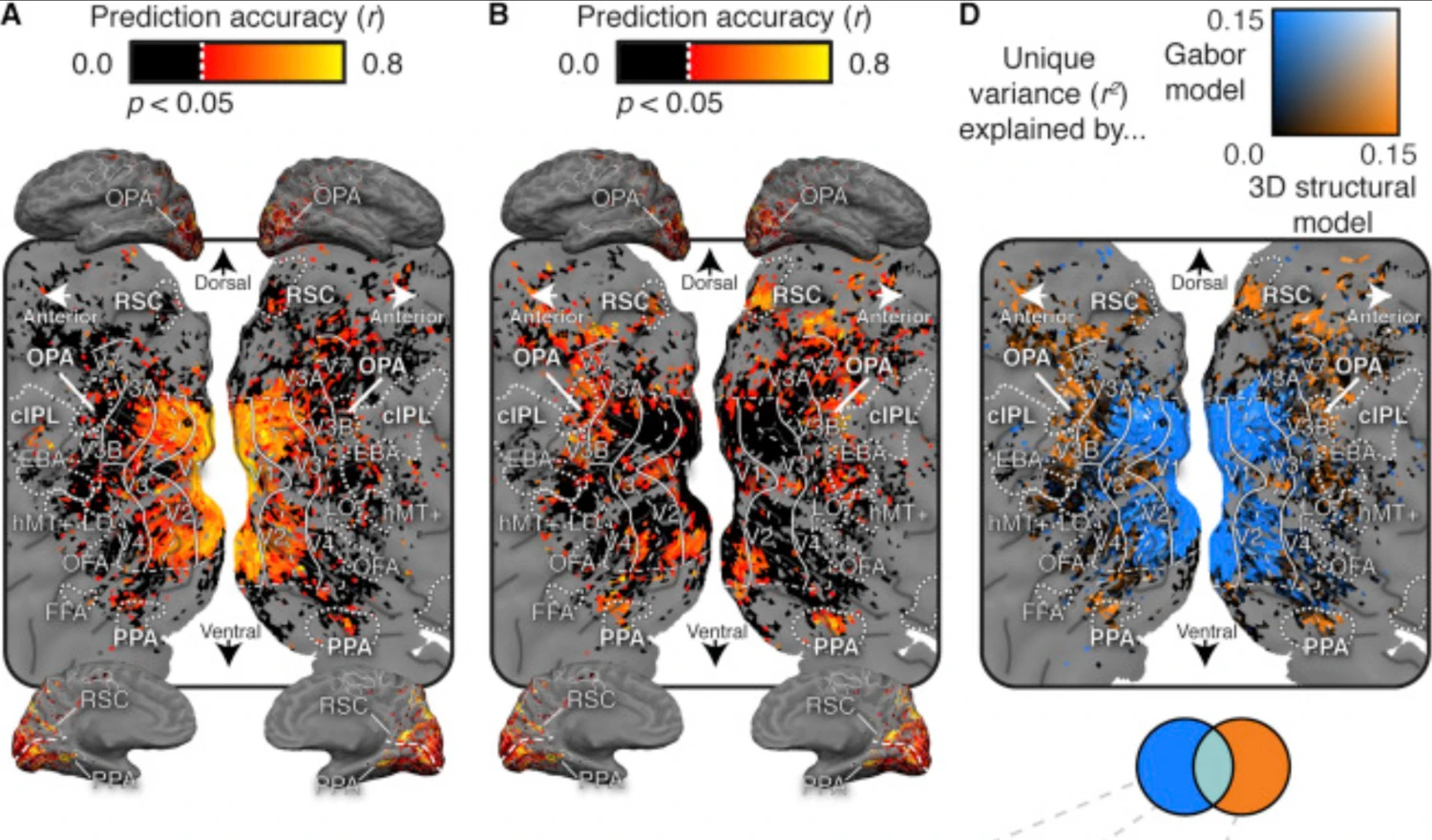
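The link between a non-spherical Gaussian prior and ordinary ridge regression can be checked numerically. The sketch below assumes a known prior covariance `Sigma` and a scalar penalty `lam`, uses random data, and is an illustration rather than the paper's code. It solves the Tikhonov problem directly and again after transforming it to standard form with a Cholesky factor of `Sigma`.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features, n_voxels = 100, 6, 3
X = rng.standard_normal((n_samples, n_features))
Y = rng.standard_normal((n_samples, n_voxels))

# Hypothetical non-spherical prior covariance over the weights (symmetric positive definite).
M = rng.standard_normal((n_features, n_features))
Sigma = M @ M.T + np.eye(n_features)
lam = 2.0

# Direct solution: minimize ||Y - XW||^2 + lam * tr(W' Sigma^-1 W).
W_direct = np.linalg.solve(X.T @ X + lam * np.linalg.inv(Sigma), X.T @ Y)

# Standard form: write Sigma = L L', substitute W = L V, and the penalty becomes
# lam * ||V||^2, i.e. ordinary (spherical) ridge on the transformed features X L.
L = np.linalg.cholesky(Sigma)
X_tilde = X @ L
V = np.linalg.solve(X_tilde.T @ X_tilde + lam * np.eye(n_features), X_tilde.T @ Y)
W_standard = L @ V

print(np.allclose(W_direct, W_standard))
```

Choosing `Sigma` diagonal, with entries `1/alpha_i` over the columns of feature space `i` and `lam = 1`, reduces the penalty to banded ridge regression.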

Human scene-selective areas represent 3D configurations of surfaces (Lescroart et al., Neuron, 2019)

January 2, 2019

It has been argued that scene-selective areas in the human brain represent both the 3D structure of the local visual environment and low-level 2D features that provide cues for 3D structure. To evaluate these hypotheses, we developed an encoding model of 3D scene structure and tested it against a model of low-level 2D features. We fit the models to fMRI data recorded while subjects viewed visual scenes. Scene-selective areas represent the distance to and orientation of large surfaces. The most important dimensions of 3D structure are distance and openness.

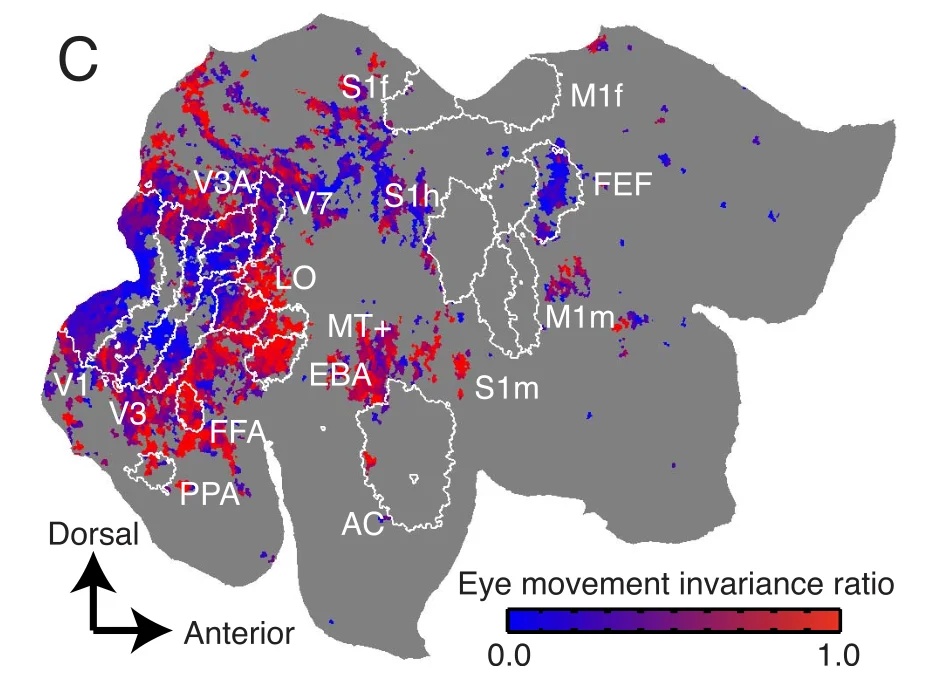

Eye movement-invariant representations in the human visual system (Nishimoto, Huth, Bilenko & Gallant, Journal of Vision, 2017)

January 1, 2017

Visual representations must be robust to eye movements, but the degree of eye movement invariance across the visual hierarchy is not well understood. We used fMRI to compare brain activity while subjects watched natural movies during fixation and free viewing. Responses in ventral temporal areas are largely invariant to eye movements, while early visual areas are strongly affected. These results suggest that the ventral temporal areas maintain a stable representation of the visual world during natural vision.

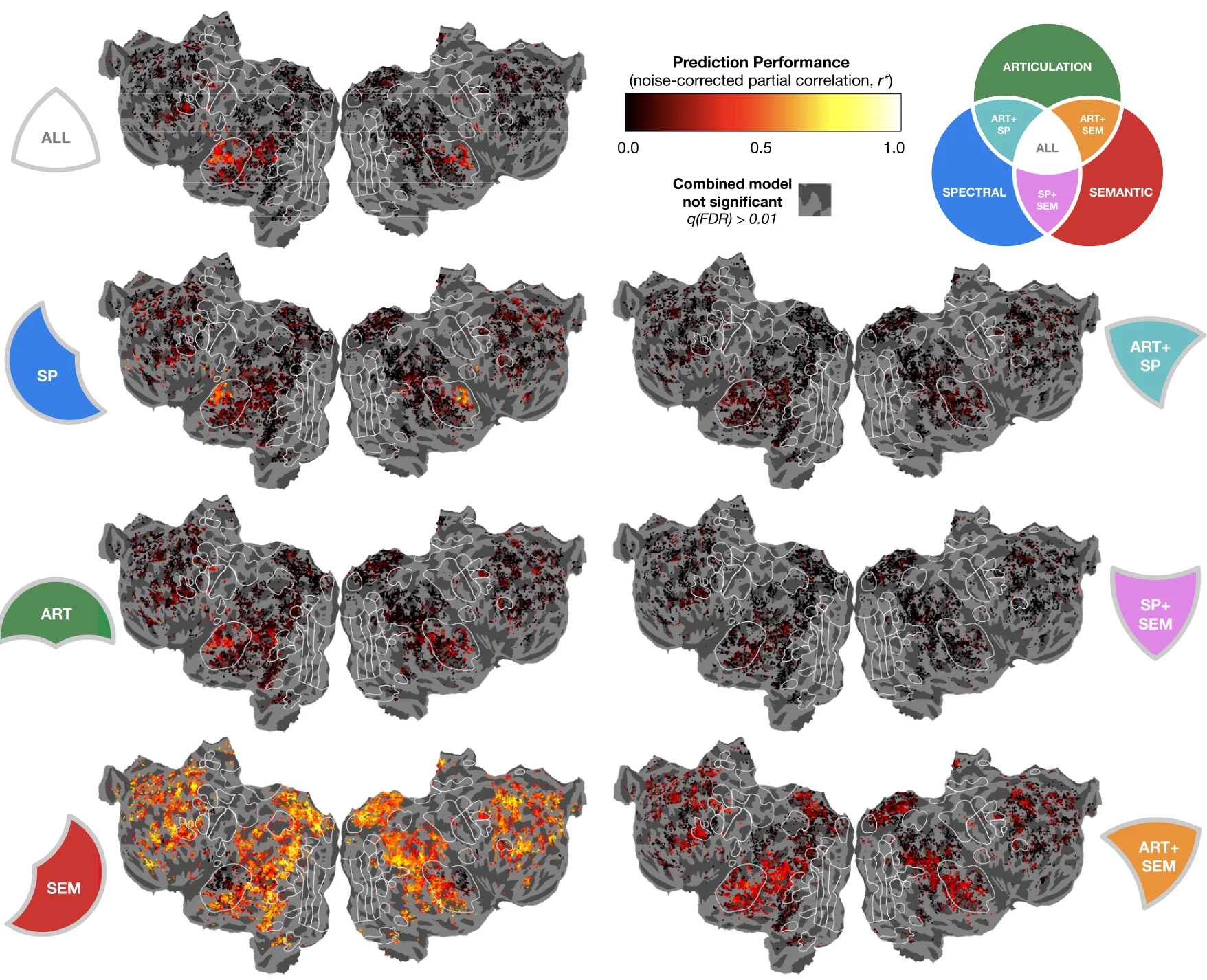

The hierarchical cortical organization of human speech processing (de Heer, Huth, Griffiths, Gallant & Theunissen, J. Neurosci., 2017)

July 5, 2017

We used voxelwise encoding models and variance partitioning to investigate how the brain transforms speech sounds into meaning. Speech processing involves a cortical hierarchy: spectral features in A1, articulatory features in STG, and semantic features in STS and beyond. Both hemispheres are equally involved, and semantic representations appear surprisingly early in the hierarchy.
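Variance partitioning compares the held-out prediction accuracy of models fit to each feature space alone and to their union. The sketch below is a minimal illustration under assumptions: `A` and `B` are hypothetical stand-ins for two feature spaces (say, spectral and articulatory features), the data are synthetic, and ridge regression with a fixed `alpha` replaces the cross-validated fitting a real analysis would use. It is not the paper's code.

```python
import numpy as np

def ridge_r2(X_train, Y_train, X_test, Y_test, alpha=1.0):
    """Fit ridge on training data; return held-out R^2 for each voxel."""
    W = np.linalg.solve(X_train.T @ X_train + alpha * np.eye(X_train.shape[1]),
                        X_train.T @ Y_train)
    resid = Y_test - X_test @ W
    return 1 - (resid ** 2).sum(0) / ((Y_test - Y_test.mean(0)) ** 2).sum(0)

def variance_partition(A_tr, B_tr, Y_tr, A_te, B_te, Y_te, alpha=1.0):
    """Split explained variance between two feature spaces A and B, per voxel."""
    r2_a = ridge_r2(A_tr, Y_tr, A_te, Y_te, alpha)
    r2_b = ridge_r2(B_tr, Y_tr, B_te, Y_te, alpha)
    r2_ab = ridge_r2(np.hstack([A_tr, B_tr]), Y_tr, np.hstack([A_te, B_te]), Y_te, alpha)
    return {
        "unique_A": r2_ab - r2_b,        # variance only A explains
        "unique_B": r2_ab - r2_a,        # variance only B explains
        "shared": r2_a + r2_b - r2_ab,   # variance both explain
    }

# Hypothetical data: voxel 0 is driven only by A, voxel 1 only by B.
rng = np.random.default_rng(0)
n, p = 1000, 10
A, B = rng.standard_normal((n, p)), rng.standard_normal((n, p))
Y = np.column_stack([A @ rng.standard_normal(p), B @ rng.standard_normal(p)])
Y += rng.standard_normal(Y.shape)
tr, te = slice(0, 500), slice(500, n)
parts = variance_partition(A[tr], B[tr], Y[tr], A[te], B[te], Y[te])
for name, value in parts.items():
    print(name, np.round(value, 2))
```

Small negative values for the near-zero partitions are expected with finite data, because adding irrelevant features slightly lowers held-out accuracy.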

Natural speech reveals the semantic maps that tile human cerebral cortex (Huth et al., Nature, 2016)

April 27, 2016

We collected fMRI data while subjects listened to narrative stories and recovered detailed lexical-semantic maps by voxelwise modeling. The semantic system is organized into intricate patterns that are consistent across individuals. Most areas represent information about groups of related concepts, and our semantic atlas shows which concepts are represented in each area.

For a complete list of publications, visit our Google Scholar page.